Automated design intelligence with GAPflow

Overview and benchmarks on GAP8

Recent advances in Deep Learning (DL) have opened new perspectives in many application domains. We can now embed DL-enabled features into everyday objects, e.g. wearable cameras that recognize objects, voice-controlled headsets or even flying sensors that monitor large areas in agricultural applications. At Greenwaves Technologies, we are getting ready for the next technology revolution by building the missing piece of technology: a DL-capable engine for highly power-constrained, battery-operated smart sensors, wearables and hearables, together with all the associated tools that let developers quickly and easily build DL-enabled functions into their devices.

Our first product, GAP8, in production since the beginning of 2020, is the leading off-the-shelf ultra-low-power IoT Application Processor. It combines ultra-low energy consumption, low cost and high computational power for compute-intensive tasks while preserving the form factor, system cost, energy efficiency and flexibility of a typical microcontroller.

Using GAP8 and the associated software tools, computation-intensive inference on sensor data, up to now limited to mobile-class devices (e.g. smartphones, Raspberry Pi boards, etc.), can move into tiny battery-operated devices that last for years on small batteries while carrying out complex context-understanding tasks. A particular domain of these tasks is image classification and recognition, where the state of the art relies on DL-based decision functions, typically using efficient NN topologies such as Mobilenets as the feature extractor.

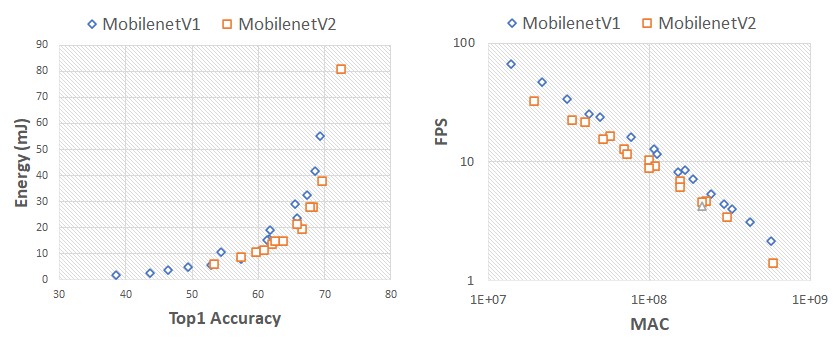

Mobilenets inference on GAP8 (1.2V@175MHz)

The plots above report the energy and latency measurements of the Mobilenet V1 and V2 families running on GAP8. The processing power of GAP8 can scale to cover a broad range of problem sizes (expressed in terms of the number of MACs and parameters). The energy cost per inference increases up to a few tens of mJ as the accuracy (measured on Imagenet) increases, while the frame rate stays above 1 FPS. Developers can pick the best operating point for their application depending on their particular requirements.
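The problem size mentioned above can be estimated by hand. The sketch below counts MACs and parameters for a standard convolution and for the depthwise separable convolution that Mobilenets are built on. The function names and the layer shape are hypothetical and chosen only for illustration; they are not taken from the GAP SDK, and bias terms are ignored.

```python
def conv2d_cost(h_out, w_out, c_in, c_out, k):
    """Return (MACs, parameters) of a standard k x k convolution, bias ignored."""
    params = k * k * c_in * c_out
    # Every output pixel applies the full set of weights once.
    macs = h_out * w_out * params
    return macs, params


def depthwise_separable_cost(h_out, w_out, c_in, c_out, k):
    """Return (MACs, parameters) of a k x k depthwise + 1 x 1 pointwise pair."""
    depthwise_params = k * k * c_in   # one k x k filter per input channel
    pointwise_params = c_in * c_out   # 1 x 1 convolution mixing the channels
    params = depthwise_params + pointwise_params
    macs = h_out * w_out * params
    return macs, params


# Hypothetical layer shape, for illustration only.
std_macs, std_params = conv2d_cost(56, 56, 64, 128, 3)
sep_macs, sep_params = depthwise_separable_cost(56, 56, 64, 128, 3)
print(f"standard : {std_macs} MACs, {std_params} parameters")
print(f"separable: {sep_macs} MACs, {sep_params} parameters")
```

Summing such per-layer figures over a whole network gives the MAC and parameter counts used to express problem size.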

Try it on your own

Are you interested in building a DL-based battery-operated application? To enable easy porting of highly efficient DL models to GAP8, we have developed an easy-to-use toolset that is part of our GAP SDK. It lets you convert DL models from TFLite format into C code that implements them on GAP8. We even include the GAP platform simulator, which lets you run the examples in simulation on your PC without one of our development boards.

A good way to start is to take a look at our image classification examples. After installing the GAP SDK, which includes all the needed tools (NNTool, GAP AutoTiler), you can run several benchmark networks on the GAP platform simulator simply by running:

$ git clone git@github.com:GreenWaves-Technologies/image_classification_networks.git
$ make clean all run platform=gvsoc

The commands above feed a TFLite Mobilenet V1 model to our NN toolset, which we call GAPflow, to generate and run C code implementing the inference network. Multiple models have already been ported to the flow, as listed in the repository.

Inside GAPflow

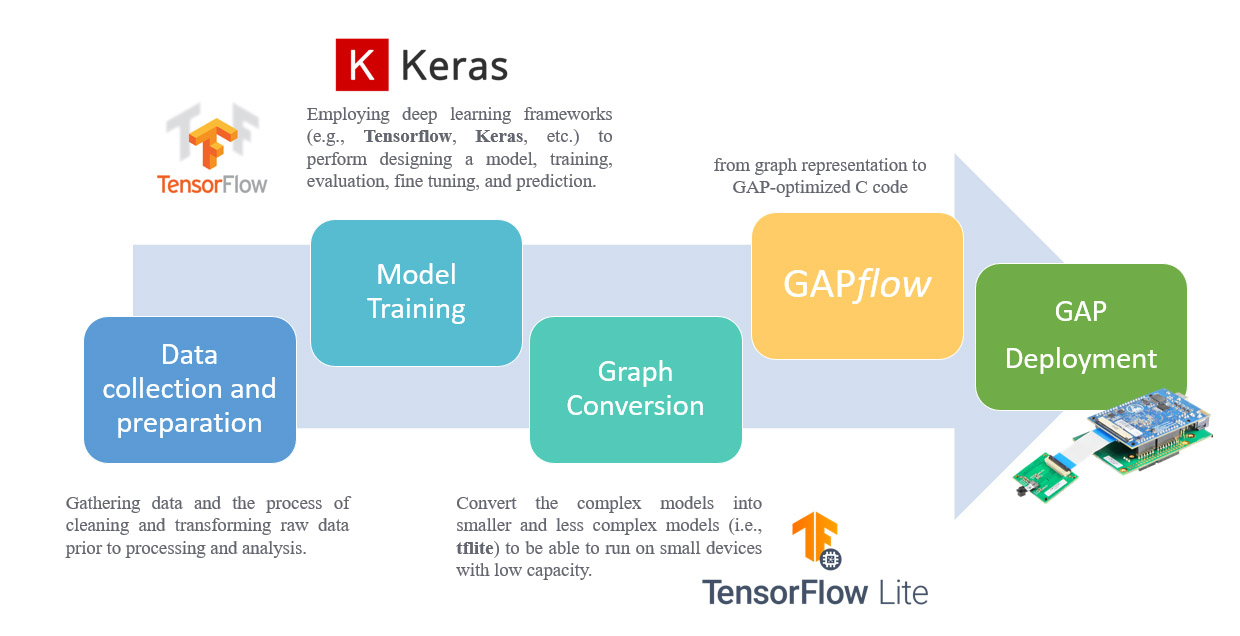

GAPflow accelerates the deployment of NNs on GAP processors while ensuring high performance and low energy consumption.

In fact, extremely energy-efficient hardware is only part of the edge intelligence story. The GAPflow toolset assists programmers in achieving short time-to-prototype of DL-based applications by generating GAP-optimized C code based on the provided DL model.

You can find more in-depth information on GAPflow in this video tutorial.

GAPflow is the missing piece between the model training process and the deployment on edge devices. It takes a file in TFLite format and produces optimized C code that runs on GAP8.

Thanks to GAPflow, application developers benefit from an automated, yet controllable and inspectable, process for importing a NN model file, e.g. a quantized TFLite model (full-precision models are also supported), and generating C source files with the NN graph primitives tailored for GAP8. Users just need to call the generated functions inside the application code to run deep neural network inference on sensor data.

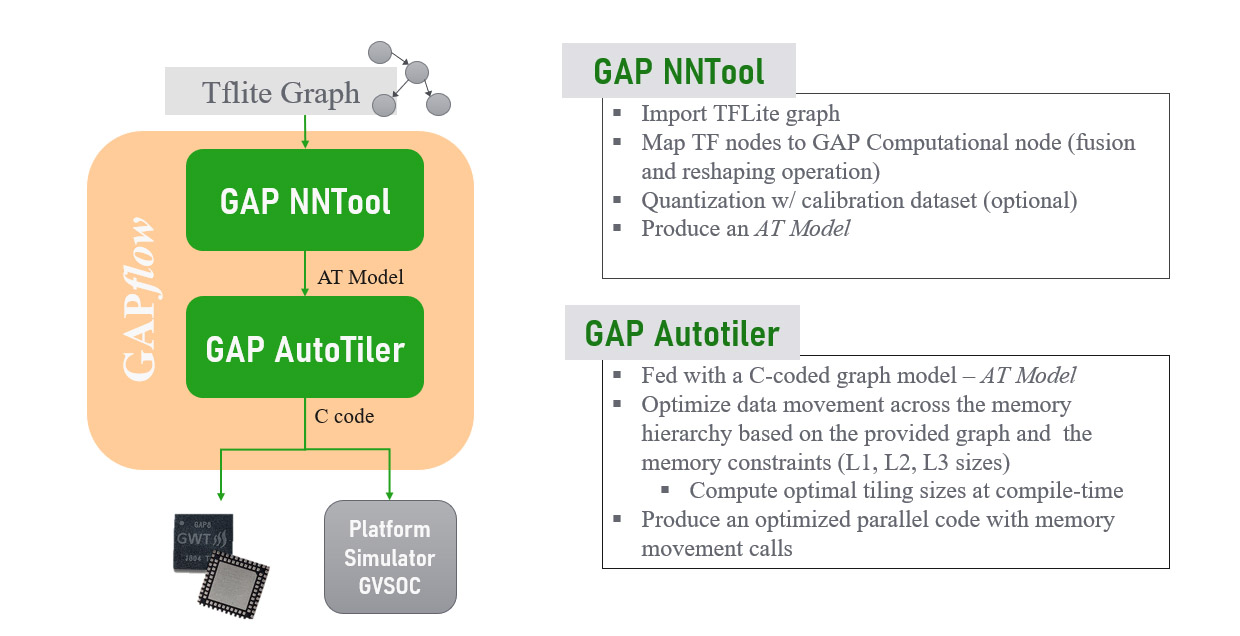

Inside GAPflow: NNTool and AutoTiler act in combination to ease the developer’s life.

Optimized embedded SW is essential to properly control the embedded processor and achieve maximum energy efficiency from the underlying HW. To achieve this goal, the GWT AutoTiler generates C code tailored for the multi-core GAP architecture, given the NN layer characteristics and the graph connections. The code generation process targets high computational efficiency, i.e. a high MAC/cycle figure, by a) making use of fast parallel linear algebra kernels and b) explicitly handling data transfers (and tiling) among the on-chip L1 (64kB) and L2 (512kB) memories and the external L3 memory (either Flash or RAM). Indeed, the data access pattern of signal processing is fully predictable, so explicitly managed data movement that prefetches data for the upcoming computation beats a cache-based solution in terms of both power consumption and latency. The code handling this explicit data movement and calling the operation kernels on the cluster cores is entirely generated by the AutoTiler.
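To make the idea of explicit tiling with prefetching more concrete, here is a minimal sketch in plain Python, assuming a matrix-vector product whose weights sit in a larger memory and are processed in row tiles sized to a small L1 budget. It is not the code the AutoTiler generates: the function names, the 4-byte accumulator assumption and the sizing rule are hypothetical, and the "prefetch" is an ordinary copy, whereas on real hardware it would be an asynchronous transfer overlapping with the computation.

```python
L1_BYTES = 64 * 1024  # L1 size quoted in the text


def rows_per_tile(n_cols, bytes_per_elem=1, l1_budget=L1_BYTES):
    """Rows per weight tile so that two tiles (double buffer), the input
    vector and one 4-byte accumulator per row fit in the L1 budget."""
    free = l1_budget - n_cols * bytes_per_elem      # space left after the input
    per_row = 2 * n_cols * bytes_per_elem + 4       # two buffers + accumulator
    rows = free // per_row
    if rows < 1:
        raise ValueError("a single row does not fit in the L1 budget")
    return rows


def tiled_matvec(weights_l2, x, l1_budget=L1_BYTES):
    """Compute y = W x tile by tile, fetching tile i+1 before computing tile i."""
    n_rows = len(weights_l2)
    if n_rows == 0:
        return []
    step = min(rows_per_tile(len(x), l1_budget=l1_budget), n_rows)
    starts = list(range(0, n_rows, step))

    def fetch(start):
        # Stand-in for an L2 -> L1 copy of one row tile.
        return [row[:] for row in weights_l2[start:start + step]]

    buffers = [fetch(starts[0]), None]              # initial fetch
    y = []
    for i in range(len(starts)):
        cur = i % 2
        if i + 1 < len(starts):
            buffers[1 - cur] = fetch(starts[i + 1])  # prefetch the next tile
        for row in buffers[cur]:                     # compute on the current tile
            y.append(sum(w * v for w, v in zip(row, x)))
    return y


# Tiny example with an artificially small budget to force several tiles.
W = [[r + c for c in range(8)] for r in range(10)]
x = list(range(8))
y = tiled_matvec(W, x, l1_budget=64)
```

Because the tile order is known ahead of time, the next tile can always be requested before it is needed, which is the property that lets explicit data movement replace a cache.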

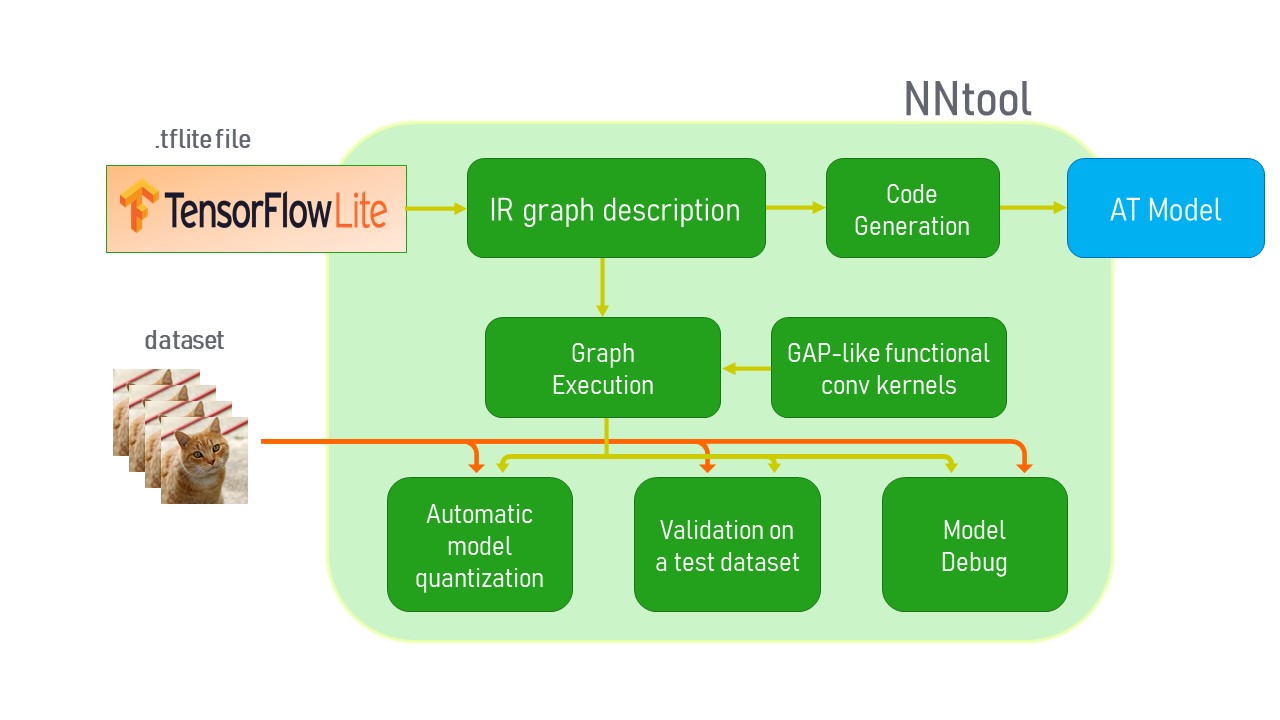

The GAP AutoTiler block in the diagram above is fed with a NN model description, the AutoTiler model (AT Model). Developers using environments other than TensorFlow can write the AT Model directly. If you are using TensorFlow, GAP NNTool provides a Python-based interface to import a TFLite model and generate the AT Model, and also to configure extra features via Python-based commands (e.g. insertion of layer-wise performance counters). NNTool can do more than this, thanks to an embedded inference engine that runs GAP-like (Python) kernels which replicate the NN functionality of GAP8. Users can exploit this to “debug” their NN models on their own data, run validation on a calibration dataset or carry out post-training quantization.
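As an illustration of what post-training quantization involves, the sketch below derives a symmetric 8-bit scale from calibration samples and uses it to quantize and dequantize values. This is a generic textbook scheme shown under stated assumptions; it is not NNTool's API or its actual quantization algorithm, and all function names are hypothetical.

```python
def calibrate_scale(samples, num_bits=8):
    """Pick a symmetric scale so the largest calibration magnitude maps to qmax."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in samples)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round to the nearest integer step and clip to the symmetric range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integer codes back to approximate real values."""
    return [q * scale for q in q_values]


# Hypothetical calibration samples, for illustration only.
calibration = [-1.0, 0.5, 0.25, 2.0]
scale = calibrate_scale(calibration)
codes = quantize([2.0, -2.0, 0.0, 4.0], scale)   # 4.0 lies outside the range and is clipped
restored = dequantize(quantize(calibration, scale), scale)
```

Comparing `restored` with the original samples gives a quick feel for the rounding error, which is the kind of check a calibration run is meant to support.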

Do you want more?

We recently released several reference Deep Learning based projects using GAP8 in our NNMenu GitHub repository. Enjoy and stay tuned for upcoming releases!