Visual Wakewords on GAP8

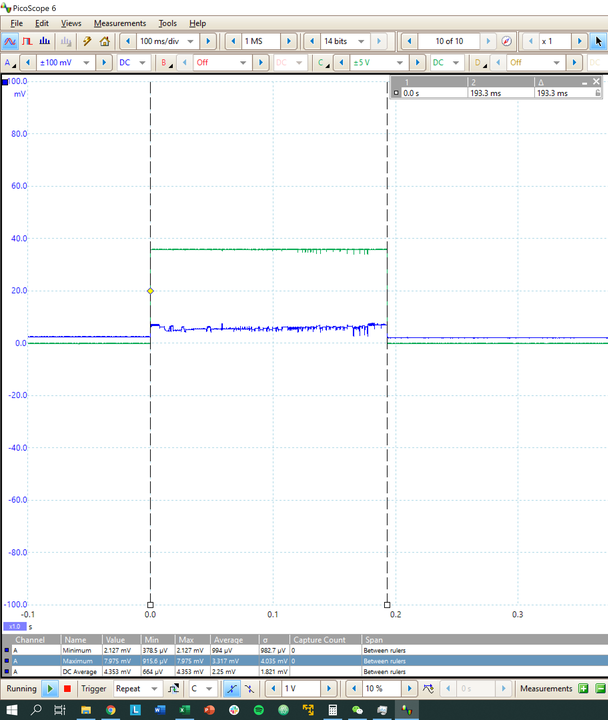

Sample execution trace of the Visual Wake Words network

In the latest GAP SDK, released on 7 February 2020, we have included a GAPflow example that converts the winning network from the Google Visual Wake Words challenge into a working model on GAP8.

Visual Wake Words is Google’s term for a network trained for a binary classification task: detecting the presence or absence of a human in an image. Google provided a Python script that relabels the well-known MSCOCO dataset, which normally includes multiple object classes with bounding boxes, with binary person/no-person labels.
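
To make the relabeling idea concrete, here is a minimal sketch of how multi-class bounding-box annotations can be collapsed into person/no-person labels. It is not Google's script: the data layout, the function name `relabel_person`, and the `min_area_fraction` knob are all hypothetical and chosen only for illustration.

```python
# Hypothetical sketch of binary relabeling; not Google's actual script.
# Assumed layout: each image has an id and a size, and each object has
# an image_id, a category name, and a bbox given as [x, y, width, height].

def relabel_person(images, objects, min_area_fraction=0.0):
    """Return {image_id: 1 if a person is present, else 0}.

    min_area_fraction is an illustrative knob: a person box only counts
    if it covers at least this fraction of the image area.
    """
    labels = {img["id"]: 0 for img in images}
    image_area = {img["id"]: img["width"] * img["height"] for img in images}
    for obj in objects:
        if obj["category"] != "person":
            continue  # every other class is ignored
        _, _, box_w, box_h = obj["bbox"]
        if box_w * box_h >= min_area_fraction * image_area[obj["image_id"]]:
            labels[obj["image_id"]] = 1
    return labels


images = [
    {"id": 1, "width": 640, "height": 480},
    {"id": 2, "width": 640, "height": 480},
]
objects = [
    {"image_id": 1, "category": "person", "bbox": [10, 20, 100, 200]},
    {"image_id": 1, "category": "dog", "bbox": [300, 200, 80, 60]},
    {"image_id": 2, "category": "car", "bbox": [0, 0, 320, 240]},
]

labels = relabel_person(images, objects)
print(labels)  # image 1 contains a person, image 2 does not
```

Raising `min_area_fraction` would drop images where the person is only a small part of the scene; whether and how the real script filters on size is not covered here.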

The winning submission came from a team at MIT, who have made quantized and non-quantized versions of their network available on GitHub at: https://github.com/mit-han-lab/VWW

We started with the non-quantized version of the model and ran it through the full GAPflow toolset. First, we converted the protobuf to a non-quantized TensorFlow Lite file using the TOCO converter from Google. Next, we used the GAP NNTool to convert the graph to a quantized GAP AutoTiler model, using some of the images from the test set to analyse the dynamic range of the activations for quantization into int8 containers. Finally, the generated AutoTiler model is executed, and the GAP AutoTiler produces highly optimized, readable GAP8 C code. This process is entirely automatic but highly controllable: several parameters can be tuned during conversion.
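
The calibration step can be illustrated with a short sketch: observe the range of an activation over a few sample inputs, then derive a scale that maps that range onto int8. This is a generic symmetric max-abs scheme assumed for illustration only; it is not NNTool's actual quantization algorithm, and the function names are hypothetical.

```python
# Generic symmetric int8 calibration sketch; NOT NNTool's actual scheme.
# All function names here are hypothetical.

def calibrate_scale(activation_batches):
    """Derive one scale from the largest absolute activation observed."""
    max_abs = max(abs(v) for batch in activation_batches for v in batch)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values, scale):
    """Round to the nearest step and clamp into the int8 range."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def dequantize(quantized, scale):
    """Map int8 values back to approximate real values."""
    return [q * scale for q in quantized]


# Activations recorded while running a few calibration images (made-up numbers).
calibration = [[-0.5, 0.1, 2.0], [1.2, -3.175, 0.7]]
scale = calibrate_scale(calibration)

# 10.0 lies outside the calibrated range, so it saturates at 127.
quantized = quantize([3.175, -3.175, 0.0, 10.0], scale)
print(scale, quantized)
```

The sketch shows why representative calibration images matter: values outside the observed range saturate, while an overly wide range wastes int8 resolution on values that never occur.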

The model takes just 17.7M cycles to run on GAP8. At the most energy-efficient operating point, an inference takes 193 ms and consumes 6.05 mJ/frame. At a faster operating point, an inference takes 92 ms and consumes 7.29 mJ/frame.
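
These figures can be cross-checked with some simple arithmetic. The sketch below derives the implied clock frequency, average power, and frame rate for each operating point. It assumes that the whole execution time is spent on the 17.7M cycles, which is a simplification; the helper name `operating_point` is hypothetical.

```python
# Back-of-the-envelope check of the published figures.
# Assumption: execution time = cycle count / clock frequency, with no other overhead.

CYCLES = 17.7e6  # cycles per inference, from the text


def operating_point(cycles, energy_j, time_s):
    """Derive implied clock, average power and frame rate for one operating point."""
    return {
        "clock_mhz": cycles / time_s / 1e6,
        "avg_power_mw": energy_j / time_s * 1e3,
        "frames_per_s": 1.0 / time_s,
    }


low_energy = operating_point(CYCLES, energy_j=6.05e-3, time_s=193e-3)
low_latency = operating_point(CYCLES, energy_j=7.29e-3, time_s=92e-3)

for name, point in [("low energy", low_energy), ("low latency", low_latency)]:
    print(
        f"{name}: {point['clock_mhz']:.1f} MHz, "
        f"{point['avg_power_mw']:.1f} mW, "
        f"{point['frames_per_s']:.1f} frames/s"
    )
```

Under that assumption, the two operating points correspond to clocks of roughly 92 MHz and 192 MHz and average powers of roughly 31 mW and 79 mW. In other words, about half the latency costs about 20% more energy per frame.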

You can download the GAP SDK at https://github.com/GreenWaves-Technologies/gap_sdk. The example is located in examples/nntool/visual_wake. It can be run on a GAP8 processor using a GAPUINO board, or on the GAP GVSOC SoC simulator on your PC.