GAP8 performance versus ARM M7 on Embedded CNNs

ARM recently published CMSIS-NN, a new CMSIS library for embedded convolutional neural networks (CNNs). First of all, it was great to see ARM supporting the market that GreenWaves and GAP8 are focused on. We particularly liked their statement that “Neural Networks are becoming increasingly popular in always-on IoT edge devices performing data analytics right at the source, reducing latency as well as energy consumption for data communication.” We definitely share this vision of the market and have in fact been working to make it a reality for the last couple of years. In addition, we see the opportunity to do many different types of content understanding on battery-powered devices, not just embedded CNNs/DNNs.

We thought it would be interesting to compare their performance figures with GAP8 running the same CNN graph and see how we do. In their benchmarks, ARM used an STM32 F7 as their M7-based target. The F7 is manufactured in a 90nm process, which penalizes its power consumption, particularly at its maximum clock speed of 216MHz. To make the comparison fairer, we’ve used power figures for an STM32 H7, which has a maximum clock speed of 400MHz and at 216MHz is running well inside its comfort zone from a power consumption perspective. The STM32 H7 is based on the same ARM M7 core, so its cycle performance will be very similar to the F7’s. It is also manufactured in a 40nm process, which is more comparable to the 55nm LP TSMC process used for GAP8.

Both sets of power consumption figures are estimates: for the STM32 H7 they are derived from the data sheet, and for GAP8 they come from our own power estimates.

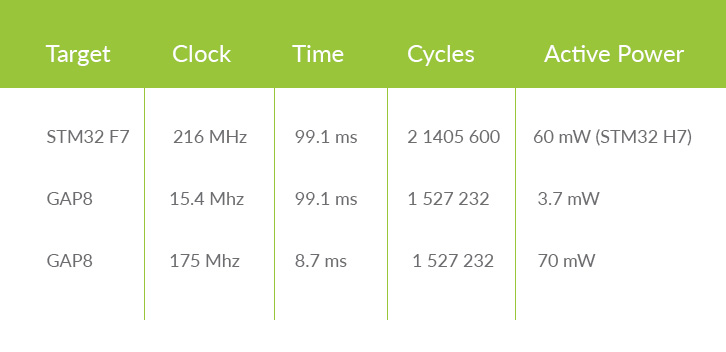

The table compares the figures from ARM’s blog post with GAP8 running the same CNN graph for the same inference on its 8-core processor cluster.

Analysis of embedded CNN performance

The M7-based STM32 F7 takes 99.1ms at 216MHz to run inference on the CNN, which is trained on CIFAR-10 images and has a fairly classical graph structure. The weights are all quantized to 8-bit fixed point. This works out at 21.4M cycles. The performance analysis in the ARM document puts the number of operations needed for the inference at 24.7M. The M7 is a dual-issue architecture, but that doesn’t seem to be helping much in this case. Perhaps there is room for optimization…
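As a quick check of the M7 arithmetic in the paragraph above: the cycle count is clock frequency multiplied by execution time, and dividing the operation count by that gives the throughput actually achieved per cycle. This is a minimal sketch; the constants are the figures quoted from ARM’s benchmark, and the helper name `cycles` is only illustrative.

```python
# Figures quoted from ARM's CMSIS-NN CIFAR-10 benchmark on the STM32 F7.
M7_CLOCK_HZ = 216e6         # Cortex-M7 clock frequency
M7_LATENCY_S = 99.1e-3      # measured inference time
OPS_PER_INFERENCE = 24.7e6  # operation count from ARM's analysis


def cycles(clock_hz, latency_s):
    """Cycles consumed = clock frequency x execution time."""
    return clock_hz * latency_s


m7_cycles = cycles(M7_CLOCK_HZ, M7_LATENCY_S)
ops_per_cycle = OPS_PER_INFERENCE / m7_cycles

print(f"M7 cycles: {m7_cycles / 1e6:.1f}M")   # M7 cycles: 21.4M
print(f"ops per cycle: {ops_per_cycle:.2f}")  # ops per cycle: 1.15
```

At roughly 1.15 operations per cycle, the core is doing little more than one operation per clock, which is what the remark about the dual-issue pipeline not helping much refers to.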

GAP8 takes 1.5M cycles to run the same inference. We are not using GAP8’s Hardware Convolution Engine (HWCE) in this test, since we wanted to show how effective GAP8 is as a general-purpose compute engine. From our tests, we would expect the HWCE to reduce GAP8’s power consumption on embedded CNNs by a factor of approximately 2 to 3.

Why is GAP8 using so few cycles? First, we’re running on 8 cores, and GAP8’s extremely efficient architecture for parallelization gives us a speed-up of between 7 and 8 times. Second, GAP8’s optimized DSP/SIMD instructions provide fine-grained parallelism within the convolution operations. Finally, our fine-grained control over memory movement substantially reduces the number of cycles spent loading and storing the weights and the input and output data of the CNN graph nodes. Together, these factors let us match the M7’s 99.1ms inference time at a clock speed of only 15.4MHz. This, in turn, allows us to run the cores at 1V, giving a power consumption during the operation of 3.7mW. Here we really benefit from the cluster’s shared instruction cache, which reduces the cost of running all 8 cores by fetching instructions only once.
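The first two factors above, multi-core parallelism and packed SIMD arithmetic, can be illustrated with a small Python model. This is a sketch under stated assumptions, not GreenWaves’ implementation: it writes a convolution layer in im2col form (each output is the dot product of a flattened filter with an input patch), statically splits the output channels across 8 cores, and computes every dot product four int8 lanes at a time, emulating a packed SIMD dot-product instruction. All function names (`pack4`, `dotp4`, `core_range`, `conv_layer`) are hypothetical, the "cores" run one after another rather than concurrently, and the third factor, memory movement, is not modeled.

```python
# Hypothetical model of a cluster convolution kernel: names and structure are
# illustrative only, not the GreenWaves SDK.
NUM_CORES = 8  # cores in the GAP8 cluster


def pack4(vals):
    """Pack four int8 values into one 32-bit word, lane 0 in the lowest byte."""
    word = 0
    for lane, v in enumerate(vals):
        word |= (v & 0xFF) << (8 * lane)
    return word


def to_int8(byte):
    """Reinterpret an unsigned byte as a signed int8."""
    return byte - 256 if byte > 127 else byte


def dotp4(a_word, b_word, acc):
    """Emulate one 4-lane int8 SIMD dot product accumulated into 32 bits."""
    for lane in range(4):
        a = to_int8((a_word >> (8 * lane)) & 0xFF)
        b = to_int8((b_word >> (8 * lane)) & 0xFF)
        acc += a * b
    return acc


def dot_int8(a, b):
    """Dot product of int8 vectors, four lanes per emulated SIMD operation."""
    assert len(a) == len(b) and len(a) % 4 == 0
    acc = 0
    for i in range(0, len(a), 4):
        acc = dotp4(pack4(a[i:i + 4]), pack4(b[i:i + 4]), acc)
    return acc


def core_range(core_id, n_items, n_cores=NUM_CORES):
    """Static split: the contiguous block of output channels one core owns."""
    chunk = (n_items + n_cores - 1) // n_cores
    start = min(core_id * chunk, n_items)
    return start, min(start + chunk, n_items)


def conv_layer(patches, filters):
    """Convolution in im2col form: out[oc][p] = dot(filters[oc], patches[p]).

    Each core handles its own output channels. Here the cores are simulated
    one after another; on the cluster they would run concurrently.
    """
    out = [None] * len(filters)
    for core_id in range(NUM_CORES):
        start, end = core_range(core_id, len(filters))
        for oc in range(start, end):
            out[oc] = [dot_int8(filters[oc], p) for p in patches]
    return out


def conv_reference(patches, filters):
    """Plain scalar version used to check the SIMD/multi-core model."""
    return [[sum(w * x for w, x in zip(f, p)) for p in patches] for f in filters]


# Small deterministic int8 test data: 20 output channels, 6 patches of 8 values.
filters = [[(oc * 7 + k * 3) % 256 - 128 for k in range(8)] for oc in range(20)]
patches = [[(p * 5 + k * 11) % 256 - 128 for k in range(8)] for p in range(6)]

print(conv_layer(patches, filters) == conv_reference(patches, filters))  # True
```

With 20 output channels and 8 cores, this static split gives cores 0 to 5 three channels each, core 6 two and core 7 none; uneven splits like this are one generic reason a parallel speed-up can fall short of the core count.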

On this inference, GAP8 is 16 times more energy efficient than the M7 core in the STM32 H7. We’re obviously not saying that we can do everything better than an M7. What we are saying is that for this type of workload we are far more energy efficient, so if you want to run a CNN on an MCU-class processor, you should take a look at GAP8.
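Energy per inference is average power multiplied by execution time. The sketch below applies that to the 1V figures from the text. The M7 numbers it prints are derived from the stated 16× ratio, not read from the table, and the constant and function names are illustrative.

```python
# GAP8 at its 1 V operating point (figures from the text).
GAP8_POWER_W = 3.7e-3  # estimated cluster power
LATENCY_S = 99.1e-3    # same inference time as the M7
ENERGY_RATIO = 16      # stated energy advantage vs the M7 in the STM32 H7


def energy_j(power_w, latency_s):
    """Energy for one inference = average power x execution time."""
    return power_w * latency_s


gap8_energy = energy_j(GAP8_POWER_W, LATENCY_S)

# Derived, not measured: what the 16x ratio implies for the M7.
implied_m7_energy = gap8_energy * ENERGY_RATIO
# Both run for the same 99.1 ms, so the energy ratio equals the power ratio.
implied_m7_power = implied_m7_energy / LATENCY_S

print(f"GAP8 energy per inference: {gap8_energy * 1e3:.3f} mJ")       # 0.367 mJ
print(f"implied M7 energy:         {implied_m7_energy * 1e3:.2f} mJ")  # 5.87 mJ
print(f"implied M7 average power:  {implied_m7_power * 1e3:.1f} mW")   # 59.2 mW
```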

The last row in the table shows GAP8 executing the CNN at full clock speed. Here the cluster runs at 1.2V and its maximum clock speed of 175MHz, completing the inference in 8.7ms. That is 11 times faster than the M7 core, at a broadly similar power level of 70mW. The energy consumed is still well below the M7’s, since the inference finishes much sooner, but GAP8 is less energy efficient at this operating point than at the 1V point.
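The trade-off between the two GAP8 operating points can be checked with a few lines of arithmetic. This sketch assumes the cycle count is fixed for both points and takes it from the 1V point (15.4MHz × 99.1ms, about 1.53M cycles, which the text rounds to 1.5M); names are illustrative.

```python
M7_LATENCY_S = 99.1e-3

# GAP8 operating points from the text: (clock in Hz, cluster power in W)
LOW_V = (15.4e6, 3.7e-3)  # 1.0 V, latency matched to the M7
HIGH_V = (175e6, 70e-3)   # 1.2 V, maximum cluster clock

# Cycle count implied by the low-voltage point (~1.53M, rounded to 1.5M in the text).
CYCLES = LOW_V[0] * M7_LATENCY_S


def operating_point(clock_hz, power_w, cycles=CYCLES):
    """Return (latency, energy) for one inference at a given clock and power."""
    latency_s = cycles / clock_hz
    return latency_s, power_w * latency_s


low_latency, low_energy = operating_point(*LOW_V)
high_latency, high_energy = operating_point(*HIGH_V)

print(f"latency at 175 MHz: {high_latency * 1e3:.2f} ms")     # 8.72 ms
print(f"speed-up vs M7: {M7_LATENCY_S / high_latency:.1f}x")  # 11.4x
print(f"energy at 1.0 V: {low_energy * 1e3:.3f} mJ")          # 0.367 mJ
print(f"energy at 1.2 V: {high_energy * 1e3:.3f} mJ")         # 0.610 mJ
print(f"energy per cycle: {LOW_V[1] / LOW_V[0] * 1e12:.0f} pJ vs "
      f"{HIGH_V[1] / HIGH_V[0] * 1e12:.0f} pJ")               # 240 pJ vs 400 pJ
```

Each cycle at 1.2V costs about 1.7 times the energy of a cycle at 1V, and since the work (cycle count) is the same, finishing sooner does not compensate. That is the sense in which the full-speed point is less energy efficient.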

As we said earlier, using the HWCE would reduce GAP8’s power consumption further, by a factor of between 2 and 3.

This shows how efficiently the GAP8 cluster handles a large, data-parallel processing task such as an embedded CNN.

More generally, we think this shows how being able to innovate in CPU and instruction set architecture can bring massive benefits when specifically targeting an application space such as embedded CNNs. Our ability to leverage open source is absolutely key to this; we would definitely not exist without it.