
Example applications


Canny edge detection is a popular edge detection algorithm. It extracts useful structural information from images while dramatically reducing the amount of data to be processed, and it has been widely applied in computer vision systems.

With this application example, we show how this algorithm can be implemented on GAP8.

Thanks to GAP8's extended instruction set, a multiply-accumulate (MAC) operation on two 4-element vectors completes in a single cycle. To achieve maximum performance, the main operations of the algorithm are vectorized to exploit this.

For instance, the first step of the algorithm applies a 5x5 Gaussian filter to smooth the image, and the 25 filter coefficients are represented with seven 4-element vectors. Please read the comments in the source code for further information.
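The packing idea can be sketched in portable C. This is not the SDK's source: the kernel values (the common 5x5 Gaussian approximation whose coefficients sum to 159), and the names `dotp4_acc`, `pack5x5` and `gauss5_at` are assumptions for illustration, and the single-cycle 4-lane MAC is emulated with an ordinary loop rather than a GAP8 builtin. In this layout the 25 coefficients are stored row by row in 28 lanes, with the 3 spare lanes set to zero so they add nothing to the sum; the SDK may arrange the seven vectors differently.

```c
#include <stdint.h>
#include <string.h>

#define KW   5   /* kernel width */
#define NVEC 7   /* ceil(25 / 4): the 5x5 kernel fits in seven 4-lane vectors */

/* Common 5x5 Gaussian approximation (assumed values); coefficients sum to 159. */
static const uint8_t GAUSS5[KW * KW] = {
    2,  4,  5,  4, 2,
    4,  9, 12,  9, 4,
    5, 12, 15, 12, 5,
    4,  9, 12,  9, 4,
    2,  4,  5,  4, 2,
};

/* Portable stand-in for a 4-lane multiply-accumulate. On GAP8 this would be a
 * single SIMD instruction; here it is an ordinary loop. */
static int32_t dotp4_acc(const uint8_t *a, const uint8_t *b, int32_t acc)
{
    for (int i = 0; i < 4; i++)
        acc += (int32_t)a[i] * (int32_t)b[i];
    return acc;
}

/* Copy a 5x5 window into a zero-padded buffer of NVEC * 4 = 28 bytes. */
static void pack5x5(const uint8_t *src, int stride, uint8_t out[NVEC * 4])
{
    memset(out, 0, NVEC * 4);
    for (int r = 0; r < KW; r++)
        for (int c = 0; c < KW; c++)
            out[r * KW + c] = src[r * stride + c];
}

/* Gaussian-smoothed value of pixel (x, y); the caller keeps a 2-pixel border. */
uint8_t gauss5_at(const uint8_t *img, int stride, int x, int y)
{
    uint8_t k[NVEC * 4], p[NVEC * 4];
    pack5x5(GAUSS5, KW, k);   /* would be done once per image in practice */
    pack5x5(img + (y - 2) * stride + (x - 2), stride, p);
    int32_t acc = 0;
    for (int v = 0; v < NVEC; v++)   /* 7 vector MACs instead of 25 scalar ones */
        acc = dotp4_acc(&k[4 * v], &p[4 * v], acc);
    return (uint8_t)((acc + 159 / 2) / 159);   /* divide by kernel sum, rounded */
}
```

Because the image window and the kernel share the same 28-lane layout, each output pixel costs seven vector MACs plus one division.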

Please configure your shell for the SDK by running the GAP command as indicated in the GAP8 SDK Getting Started Guide.

To run the example, type:

The result shows the number of cycles used in each part of the algorithm when running on the 8 cluster cores. The example operates on the Pedestrian.pgm image and produces img_OUT.ppm, which contains the image after Canny edge detection.

An example of the output is:

At a core frequency of 100 MHz, this would execute in 12 ms using all 8 cores.
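The timing figure is the cycle count divided by the core clock frequency. Read in reverse, and assuming the 12 ms refers to elapsed cluster time rather than a sum over the 8 cores, it corresponds to roughly:

$$N_\text{cycles} = t \cdot f_\text{core} = 12\,\text{ms} \times 100\,\text{MHz} = 1.2 \times 10^{6}\ \text{cycles}$$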

The face detection example included in the GAP8 SDK is based on the Viola-Jones face detection algorithm.

Algorithm kernels:

The detector is configured to detect faces ranging from 64x48 px down to 40x30 px. This is achieved using 3 pyramid layers.
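Viola-Jones detectors evaluate Haar-like rectangle features, and they make every rectangle sum cost four memory reads by first computing an integral image. The sketch below shows that technique in plain C. The function names and the padded (w+1) x (h+1) layout are assumptions for illustration, not the SDK's kernels.

```c
#include <stdint.h>

/* Integral image with an extra zero row and column:
 * ii[r * (w + 1) + c] = sum of img over rows < r and columns < c. */
void integral_image(const uint8_t *img, int w, int h, uint32_t *ii)
{
    int iw = w + 1;
    for (int x = 0; x <= w; x++) ii[x] = 0;            /* top zero row */
    for (int y = 0; y < h; y++) {
        uint32_t row = 0;                              /* running sum of row y */
        ii[(y + 1) * iw] = 0;                          /* left zero column */
        for (int x = 0; x < w; x++) {
            row += img[y * w + x];
            ii[(y + 1) * iw + x + 1] = ii[y * iw + x + 1] + row;
        }
    }
}

/* Sum of the rw x rh rectangle whose top-left pixel is (x, y): four lookups,
 * whatever the rectangle size. */
uint32_t rect_sum(const uint32_t *ii, int w, int x, int y, int rw, int rh)
{
    int iw = w + 1;
    return ii[(y + rh) * iw + x + rw] - ii[y * iw + x + rw]
         - ii[(y + rh) * iw + x] + ii[y * iw + x];
}
```

A two-rectangle Haar feature is then the difference of two `rect_sum` calls, which each cascade stage compares against a learned threshold.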

Please configure your shell for the SDK by running the GAP command as indicated in the GAP8 SDK Getting Started Guide.

To run the example, type:

The example can run with two input-output configurations:

There are no additional requirements for mode 1. Mode 2 requires the Himax camera to be connected to the CPI camera interface and an additional LCD screen to be mounted on the Arduino connector. The driver included in this example supports the 2.8" TFT LCD Shield based on the ILI9341 controller.

To select between configurations 1 and 2, change a define in the file main.c. To select configuration 1 (default), set the macro:

while to select configuration 2:

After changing the configuration, the project must be rebuilt and run:

To change the input image, the following two steps are required.

In the main.c file, change the name of the input file:

So far, only 324x244 images are supported.

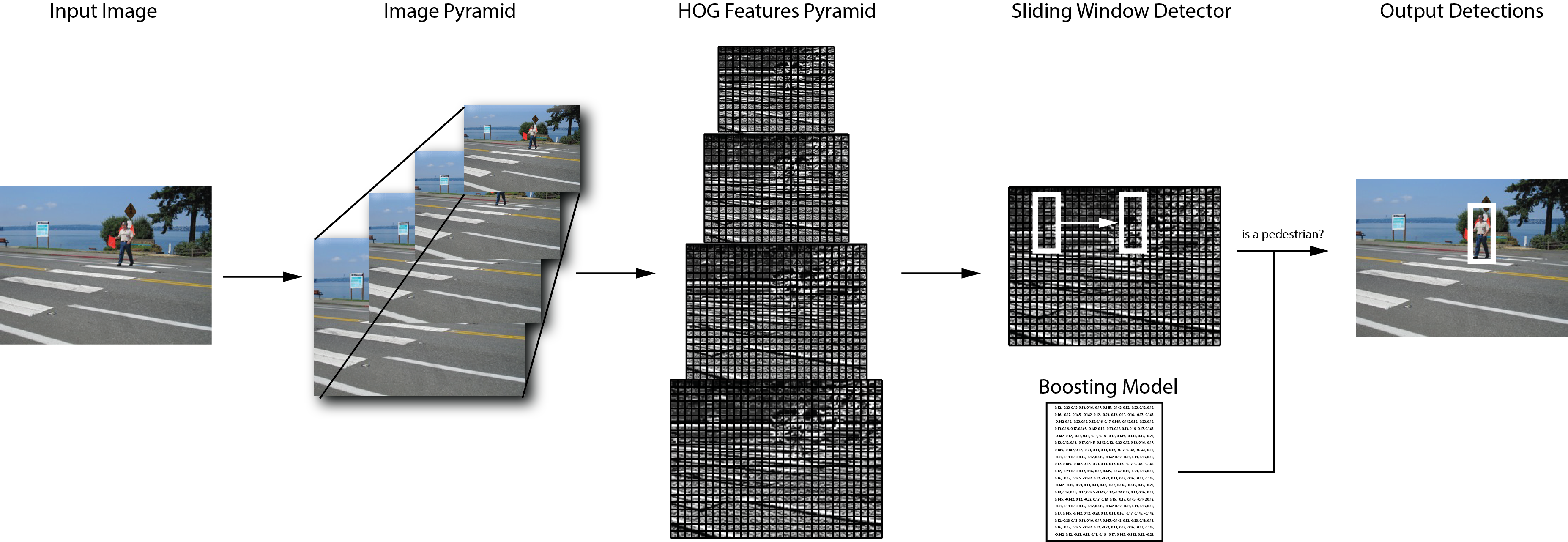

The pedestrian detection example included in the GAP8 SDK uses a fixed-point implementation of the Histogram of Oriented Gradients (HOG) (see citation 1 below). The machine learning scheme used to classify HOG descriptors is a window-based boosting technique (see citation 2 below). [Figure 1] shows an overview of the algorithm.

The diagram shows the main steps of pedestrian detection on GAP8. First, the image is sub-sampled multiple times to create an image pyramid. Then, the HOG features are computed on each layer of the pyramid. A fixed-size sliding-window detector is applied to each layer of the feature pyramid to determine whether each feature window belongs to a pedestrian. The detector compares the features against a pre-trained model using a fast boosted decision tree scheme.
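The sub-sampling step can be sketched as a fixed-point nearest-neighbour resize that produces one pyramid layer from the previous one. Nearest-neighbour sampling and the name `resize_nn` are assumptions chosen for brevity; the SDK may use a different interpolation and a different sequence of layer sizes.

```c
#include <stdint.h>

/* Nearest-neighbour resize of an 8-bit grayscale image. The step between
 * sampled source pixels is kept in Q16 fixed point, so the inner loop needs
 * no division and no floating point. */
void resize_nn(const uint8_t *src, int sw, int sh, uint8_t *dst, int dw, int dh)
{
    uint32_t step_x = ((uint32_t)sw << 16) / (uint32_t)dw;
    uint32_t step_y = ((uint32_t)sh << 16) / (uint32_t)dh;
    for (int y = 0; y < dh; y++) {
        int sy = (int)(((uint32_t)y * step_y) >> 16);     /* source row */
        for (int x = 0; x < dw; x++) {
            int sx = (int)(((uint32_t)x * step_x) >> 16); /* source column */
            dst[y * dw + x] = src[sy * sw + sx];
        }
    }
}
```

Calling it repeatedly with shrinking `dw` and `dh` (for example by a constant factor per layer, which is an assumption here) yields the image pyramid on which the HOG features are then computed.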

Histogram of Oriented Gradients (HOG) is a well-known feature extraction algorithm composed of two hierarchical parts. First, the image is divided into tiles (8 x 8 pixels in this example) called cells, and a histogram of gradient orientations is computed on each cell over 9 angle ranges, so each histogram has 9 bins.
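A per-cell histogram can be sketched in fixed point as below. It uses centred [-1 0 1] gradients, unsigned orientations in [0, 180) degrees split into 9 bins of 20 degrees, and one magnitude vote per pixel into a single bin (no interpolation between bins). These choices, the Q12 boundary table and the names `hog_cell_hist` and `isqrt32` are assumptions, not the SDK's code. The bin is found with sign tests against the bin-boundary directions instead of `atan2`, which keeps the sketch free of floating point.

```c
#include <stdint.h>

#define CELL  8
#define NBINS 9

/* cos and sin of the bin boundaries 20, 40, ..., 160 degrees, in Q12. */
static const int32_t BND_COS[NBINS - 1] = {3849, 3138, 2048, 711, -711, -2048, -3138, -3849};
static const int32_t BND_SIN[NBINS - 1] = {1401, 2633, 3547, 4034, 4034, 3547, 2633, 1401};

/* Floor of the square root of v (bit-by-bit integer method). */
static uint32_t isqrt32(uint32_t v)
{
    uint32_t r = 0, bit = 1u << 30;
    while (bit > v) bit >>= 2;
    while (bit) {
        if (v >= r + bit) { v -= r + bit; r = (r >> 1) + bit; }
        else              { r >>= 1; }
        bit >>= 2;
    }
    return r;
}

/* 9-bin histogram for the 8x8 cell whose top-left pixel is (x0, y0).
 * The cell needs a 1-pixel border inside the image for the [-1 0 1] taps. */
void hog_cell_hist(const uint8_t *img, int stride, int x0, int y0, uint32_t hist[NBINS])
{
    for (int b = 0; b < NBINS; b++) hist[b] = 0;
    for (int y = y0; y < y0 + CELL; y++) {
        for (int x = x0; x < x0 + CELL; x++) {
            int32_t gx = (int32_t)img[y * stride + x + 1] - img[y * stride + x - 1];
            int32_t gy = (int32_t)img[(y + 1) * stride + x] - img[(y - 1) * stride + x];
            /* Unsigned orientation: mirror into the half-plane [0, 180) deg. */
            if (gy < 0 || (gy == 0 && gx < 0)) { gx = -gx; gy = -gy; }
            /* Step past every boundary the gradient lies at or beyond
             * (cross product >= 0). A zero gradient lands in the last bin
             * but adds a magnitude of 0. */
            int bin = 0;
            while (bin < NBINS - 1 && BND_COS[bin] * gy - BND_SIN[bin] * gx >= 0)
                bin++;
            hist[bin] += isqrt32((uint32_t)(gx * gx + gy * gy));
        }
    }
}
```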

Once this step is done for each cell, the cells are grouped into blocks (2 x 2 cells with a stride of one cell in this example). Each block's feature is normalized, producing an output of 36 bins (4 * 9).
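Block assembly and normalization might look like the sketch below. The page does not say which norm the SDK uses. Common HOG implementations use L2 or L2-Hys, but this sketch uses L1 normalization to Q15 because it needs no square root. The cell-grid layout and the name `hog_block_l1` are hypothetical.

```c
#include <stdint.h>

#define NBINS     9
#define BLOCK_LEN (4 * NBINS)   /* 2 x 2 cells x 9 bins = 36 values */

/* Gather the 2x2 block whose top-left cell is (cx, cy) from a row-major grid
 * of per-cell histograms (cells_w cells per row, NBINS values per cell), then
 * L1-normalise it to Q15 so the 36 outputs sum to just under 32768. */
void hog_block_l1(const uint32_t *cells, int cells_w, int cx, int cy,
                  uint16_t out[BLOCK_LEN])
{
    uint32_t v[BLOCK_LEN];
    uint64_t sum = 1;   /* starting at 1 acts as an epsilon for empty blocks */
    for (int dy = 0; dy < 2; dy++)
        for (int dx = 0; dx < 2; dx++)
            for (int b = 0; b < NBINS; b++) {
                uint32_t h = cells[((cy + dy) * cells_w + (cx + dx)) * NBINS + b];
                v[(dy * 2 + dx) * NBINS + b] = h;
                sum += h;
            }
    /* Each v[i] < sum, so the Q15 result always fits in 16 bits. */
    for (int i = 0; i < BLOCK_LEN; i++)
        out[i] = (uint16_t)(((uint64_t)v[i] << 15) / sum);
}
```

Moving (cx, cy) one cell at a time over the grid gives the one-cell block stride described above, so neighbouring blocks share cells.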

For a more detailed explanation of the algorithm you can refer to these resources:

The sliding-window detector tests for the presence of a pedestrian at each location of the input feature map with a given stride. The window is 128 x 64 pixels, which gives a feature size of 3780 values. The machine learning algorithm used to classify pedestrians is based on small boosted trees.
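The 3780 figure follows from the cell and block parameters. A 128 x 64 window holds 16 x 8 cells, a 2 x 2 block sliding by one cell fits 15 x 7 = 105 times, and each block contributes 36 values, so 105 x 36 = 3780. The sketch below checks that arithmetic and shows how a boosted ensemble could score one window. The weak learners here are depth-1 trees (decision stumps) for brevity. The `stump_t` layout, the thresholds and the function names are hypothetical, and the SDK's small boosted trees may be deeper.

```c
#include <stddef.h>
#include <stdint.h>

/* Number of values in the HOG descriptor of one detection window.
 * Comments show the values for a 128 x 64 window. */
int hog_window_len(int win_h, int win_w, int cell, int block_cells,
                   int block_stride_cells, int nbins)
{
    int cells_y  = win_h / cell;                                     /* 16 */
    int cells_x  = win_w / cell;                                     /*  8 */
    int blocks_y = (cells_y - block_cells) / block_stride_cells + 1; /* 15 */
    int blocks_x = (cells_x - block_cells) / block_stride_cells + 1; /*  7 */
    return blocks_y * blocks_x * block_cells * block_cells * nbins;  /* 105 * 36 */
}

/* Hypothetical weak learner: a decision stump on one descriptor value. */
typedef struct {
    int      feature;    /* index into the window descriptor (0..3779) */
    uint16_t threshold;
    int      left;       /* vote when the feature value <  threshold */
    int      right;      /* vote when the feature value >= threshold */
} stump_t;

/* Boosted ensemble: add up the weak learners' votes and compare to a bias. */
int boosted_classify(const uint16_t *feat, const stump_t *s, size_t n, int bias)
{
    int score = 0;
    for (size_t i = 0; i < n; i++)
        score += (feat[s[i].feature] < s[i].threshold) ? s[i].left : s[i].right;
    return score > bias;   /* 1 = pedestrian, 0 = background */
}
```

Each weak learner reads a single descriptor value and performs one comparison, which is why boosted tree evaluation stays cheap even when it runs at every window position of every pyramid layer.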

The detector is configured to recognize pedestrians from 96x48 pixels to 176x88 pixels in size. This is achieved using 6 pyramid layers.

Please configure your shell for the SDK by running the GAP command as indicated in the GAP8 SDK Getting Started Guide.

To run the example, type:

The example can run with two input-output configurations:

There are no additional requirements for mode 1. Mode 2 requires the Himax camera to be connected to the CPI camera interface and an additional LCD screen to be mounted on the Arduino connector. The driver included in this example supports the 2.8" TFT LCD Shield based on the ILI9341 controller.

To select between configurations 1 and 2, change a define in the file HoGEstim.c. To select configuration 1:

and to select configuration 2:

After changing the configuration, the project must be rebuilt and run:

To change the input image, the following two steps are required.

In the main.c file, change the name of the input file:

So far, only 324x244 images are supported.